3 Things That Matter When Building A RAG Pipeline

Issue #2 - Build AI With Me

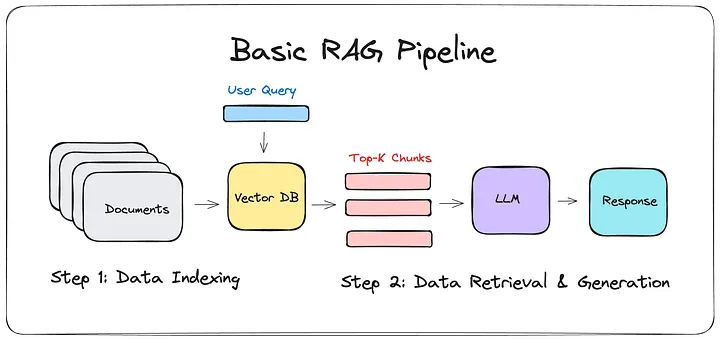

Model selection is only part of the equation (covered in the last blog). The real magic of a RAG system happens in the details: how you chunk your data, format your prompts, structure your retrieval, and evaluate your results. Here’s everything I learned about some of these tweaks you can make.

Data Chunking Strategy

Your retriever can only find what you’ve chunked. If your chunks are too large, you’ll retrieve irrelevant information. Too small, and you’ll lose critical context.

Strategy 1: Fixed-Size Chunking

# Simple fixed-size chunks

chunk_size = 512 # tokens

overlap = 0

chunks = [text[i:i+chunk_size] for i in range(0, len(text), chunk_size)]When this works:

Highly structured data (databases, tables)

When every section is independent

Technical documentation with clear sections

Strategy 2: Fixed-Size with Overlap

chunk_size = 512

overlap = 50 # tokens

chunks = []

for i in range(0, len(text), chunk_size - overlap):

chunk = text[i:i+chunk_size]

chunks.append(chunk)Trade-offs:

✅ Better context preservation

✅ More forgiving if retrieval misses optimal chunk

❌ Increased storage (chunks overlap)

❌ Potential redundancy in retrieved context

Strategy 3: Semantic Chunking

# Split by semantic boundaries (paragraphs, sections, topics)

# Using sentence transformers to find natural breakpoints

from sentence_transformers import SentenceTransformer

import numpy as np

def semantic_chunking(text, similarity_threshold=0.7):

sentences = text.split(’. ‘)

embeddings = model.encode(sentences)

chunks = []

current_chunk = [sentences[0]]

for i in range(1, len(sentences)):

similarity = cosine_similarity(

embeddings[i-1],

embeddings[i]

)

if similarity > similarity_threshold:

current_chunk.append(sentences[i])

else:

chunks.append(’. ‘.join(current_chunk))

current_chunk = [sentences[i]]

return chunksBest context preservation, but more complex to implement and tune.

When this works:

Long-form content (articles, reports, books)

Narrative or flowing text

When topic shifts matter

Recommendations

The chunk size should match your typical query complexity.

If users ask simple factual questions → smaller chunks (256-384 tokens) If users ask complex analytical questions → larger chunks (512-1024 tokens)

I discovered this by analyzing my queries: most review analysis questions needed 2-3 data points to answer properly, so 512-token chunks with overlap worked perfectly.

Data Format Alignment

Think of it this way: LLMs are pattern-matching machines trained on internet text. When they see familiar patterns, they “know” what comes next and how to interpret the information.

If your data format resembles what the model saw during training, you’re essentially speaking its native language. If it doesn’t, you’re making the model work harder to understand what you’re asking for. The closer your chunked data matches these formats, the better the model performs.

For example,

Look at the 2 ways the customer review data is formatted here.

# Poor formatting

chunk = “Great product. Fast shipping. Would buy again.”

# Better formatting (mimics review structure)

chunk = “”“

Review: 5 stars

Product: XYZ Widget

Pros: Great quality, fast shipping, good value

Summary: Customer highly recommends and would purchase again.

“”“Why this works: The structured format matched how product reviews appear in the model’s training data. The model recognizes the pattern and extracts information more accurately.

Prompt Engineering

Every model is tuned differently. What works for GPT-4 might fail for Llama. Here’s what I learned. Here are some classic principles of prompt engineering:

Be explicit about constraints

“Use only the provided context”

“Do not make assumptions.”

“If information is missing, state it.”

Define the role clearly

“You are a technical support agent.”

“You are analyzing financial report.s”

“You are a medical information assistant.”

Specify output format

“Provide your answer in this format:

- Summary: [one sentence]

- Details: [2-3 bullet points]

- Confidence: [High/Medium/Low]”Also, different models respond to different prompt styles.

Llama models: Like structured, explicit instructions

“Based on the following context, provide a detailed answer...”Flan-T5: Prefers concise, imperative prompts

“Answer: {query}\nContext: {context}”GPT models: Handle conversational, nuanced prompts well

“Given the context below, I need you to...” Final Thoughts

Trial and Error Beats All Other Learning

No guide can tell you exactly what will work for your specific:

Domain (medical vs. customer reviews vs. legal documents)

Data format (structured vs. unstructured)

Query types (factual vs. analytical vs. comparative)

Hardware constraints

Let me know if this helps, and I’m curious to hear what are tweaks you make that help you get better results!